In the war on deepfake fraudsters, banks should aim their fire where they know it will take them down – by stopping fraudulent transactions, writes Sandy Lavorel

We know professional criminals are smart, inventive and quick. Put all that together with generative AI and it spells deep trouble. Deepfake trouble.

Deepfakes use artificial intelligence to simulate someone’s voice or appearance. Today, many involve criminals cloning celebrities, politicians and high-flying executives and manipulating their voice or image in some way.

Just this month, a video appeared to show the CEO of cryptocurrency company Ripple urging people to transfer up to 500,000 XRP (about €305,000) to an address and get double back within a minute. The video said such largesse was Ripple’s way of “giving back to the community” for their support. Of course, it was a deepfake and anyone taken in lost their money.

The fraudsters aren’t just using deepfakes to imitate high-profile people – they are already using these techniques to impersonate ordinary members of the public.

Spotting deepfakes is a losing battle

Criminals need just three seconds of a recorded voice to clone it using Microsoft’s VALL-E generative AI software. And it’s pretty easy for them to find three seconds of almost anyone speaking thanks to social media. That means it won’t be long before they’re mass-targeting individuals, too.

The reputational and financial risks that deepfake fraud poses to banks are huge. As it becomes more common, more of their customers are going to get burnt. For private banks, where the personal relationship is part of the sales package and phone conversations authorizing transactions are the norm, the stakes are particularly high.

All this means being able to stop deepfake fraud is rightly attracting a lot of attention – and investment. There are watermark schemes that put a stamp on bona fide communications; there is software that looks for implausible attributes associated with deepfakes, from odd lighting and shadows to how the mouth moves; there are projects that examine the content and context of a video or recording and how it’s shared to assess the risk; and there is software that checks the likelihood of a picture or video having been manipulated.

All enjoy some success – today. But we know that an arms race exists between the criminals and the good guys, and the criminals are quick to adapt their techniques and technology to sidestep whatever the good guys develop to try to stop them.

A bulletproof solution

Rather than enter this phony war, aimed at spotting the deepfakes, it’s surely better to focus on something that can’t be faked – the account holder’s normal behavior. If we know the customer well enough to have built a digital profile, anything outside that profile should trigger a red flag and a pause during which the transaction can be investigated. The red flag could be a new destination account; an unusual amount; activity at an unlikely time of day; or even a different login. These markers cannot be faked, and all transactions have them. It’s just a question of knowing what to look for.
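To make the idea concrete, here is a minimal sketch of how the four red flags above could be checked against a customer profile. It is an illustration only, not a description of any vendor's product: the `Transaction` fields, the `build_profile` and `red_flags` helpers, the use of a device identifier to stand in for "a different login", and the three-standard-deviation threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, pstdev


@dataclass
class Transaction:
    amount: float
    destination: str      # destination account identifier
    timestamp: datetime
    device_id: str        # hypothetical stand-in for "how the customer logged in"


@dataclass
class CustomerProfile:
    known_destinations: set
    known_devices: set
    usual_hours: set      # hours of the day (0-23) seen in past activity
    mean_amount: float
    std_amount: float


def build_profile(history):
    """Summarize a customer's past transactions into a simple profile."""
    amounts = [t.amount for t in history]
    return CustomerProfile(
        known_destinations={t.destination for t in history},
        known_devices={t.device_id for t in history},
        usual_hours={t.timestamp.hour for t in history},
        mean_amount=mean(amounts),
        std_amount=pstdev(amounts),
    )


def red_flags(profile, tx, z_threshold=3.0):
    """Return the reasons, if any, why tx falls outside the profile."""
    flags = []
    if tx.destination not in profile.known_destinations:
        flags.append("new destination account")
    # Floor the spread at 1.0 so very regular customers do not cause
    # a division by zero or flag trivial differences (an assumed choice).
    spread = max(profile.std_amount, 1.0)
    if abs(tx.amount - profile.mean_amount) / spread > z_threshold:
        flags.append("unusual amount")
    if tx.timestamp.hour not in profile.usual_hours:
        flags.append("unlikely time of day")
    if tx.device_id not in profile.known_devices:
        flags.append("different login")
    return flags


# Example: three routine daytime payments, then a large 3 a.m. transfer
# to an unknown account from an unknown device.
history = [
    Transaction(100.0, "ACC-A", datetime(2023, 11, 1, 9, 0), "phone-1"),
    Transaction(120.0, "ACC-B", datetime(2023, 11, 8, 10, 0), "phone-1"),
    Transaction(110.0, "ACC-A", datetime(2023, 11, 15, 11, 0), "phone-1"),
]
profile = build_profile(history)
suspicious = Transaction(50_000.0, "ACC-Z", datetime(2023, 11, 20, 3, 0), "laptop-9")
print(red_flags(profile, suspicious))
```

A non-empty list would pause the payment for investigation. A production system would weigh and combine many more signals than these four, but the principle is the same: the check looks at the transaction, not at whether the voice or face behind it is genuine.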

Identifying red-flag operations is what NetGuardians has been doing since 2011. Today, its AI-powered anti-fraud and anti-money-laundering transaction monitoring solutions protect more than 100 million individuals worldwide and monitor billions of transactions in real time. The software uses machine learning and 3D artificial intelligence to build a profile of each bank customer. It then compares every transaction made by the customer against their profile. Any irregularity triggers an alert that identifies the issue for easy investigation.


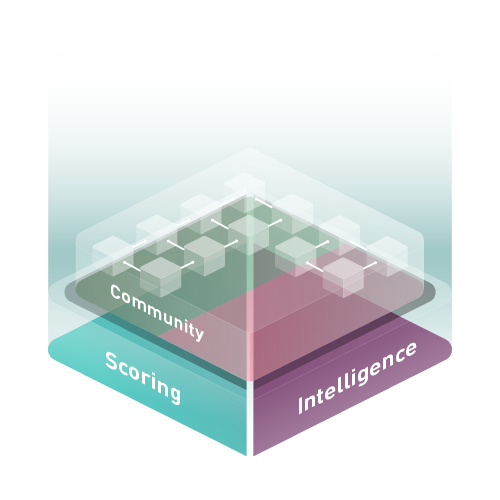

NetGuardians’ Community Scoring & Intelligence (CS&I) solution adds another line of defense, ensuring that the criminals do not benefit from their ill-gotten gains. This solution allows a consortium of banks to securely receive and share anonymized insights and incorporate additional risk scores. This approach further improves fraud detection rates and identifies bank accounts used for money laundering.

So easy is the investigation that banks using the software have reduced customer friction by 85 percent. It also means lower operating costs – more than 75 percent lower. But critically, the software also spots more fraud and new fraud types.

Results like this protect not just customers’ money and the bank’s reputation, but also customers’ peace of mind. The emotional trauma of falling for a deepfake should not be underestimated. Shame and guilt can plague the innocent victims, causing depression and worse.

We are all potential victims of financial fraud. Analyzing transaction data and payments remains the most fail-safe way to stop it. With deepfakes, that’s no different. The challenge isn’t how to spot the interloper, but how to stop the transaction.

Sandy Lavorel is the Head of Fraud Intelligence at NetGuardians